I'm telling you it's that flaky spec again!

We sometimes rush to put labels on things. Are a couple of retries enough to characterize an E2E spec as flaky??? Shame on you..!

Case study

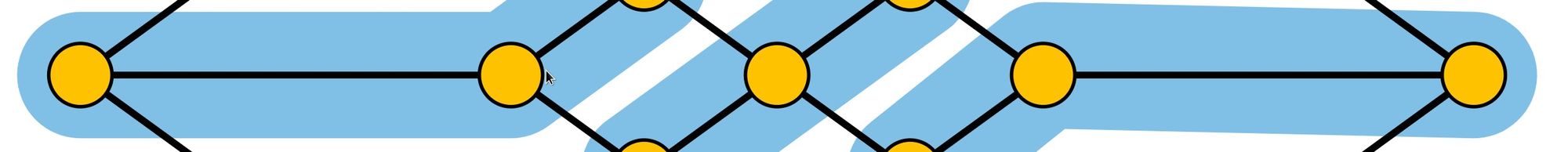

Let's try to break an E2E spec run down to its atoms. Its (oversimplified) core components for our study are the following.

- A (headless) web browser.

- A scenario of clicks/scrolls/inputs and all sorts of user interactions with a web application.

- A network through which the browser sends its requests and communicates with a test web server.

- A Kubernetes cluster.

- A pod for executing the headless browser.

- A pod that hosts the test web server.

- Pods that host other services, either stateful like a database or cache, or stateless like a background worker [6].

If you don't use Kubernetes, the same concepts still apply. A pod could be a single container, and instead of Kubernetes you could orchestrate your containers any way you see fit.

Oversimplified definitions

Flaky spec

A spec that seems to be failing randomly but regularly.

Web browser

A web browser that is driven by automation software like Selenium or Cypress [5]. In headless mode you don't see the browser itself, but you can create video recordings or screenshots as you would in normal mode. This will come in handy when debugging spec failures. Headless is of course the mode you'll be using on your CI setup.

Scenario

Write your scenario in any DSL you like. Either way, the scenario will jump through hoops to achieve your goals [4].

Keep in mind that E2E is the only suite that uses the system as a whole, interacting through a UI to change the application state and validating the state change again through a UI repaint or an HTTP redirect. With a more realistic testing scheme you have more area to cover, so naturally more errors can bubble up in the process.

Network

A web browser works over a network whether we like it or not. A network partition or a slow network is not only acceptable but a reality, with slowness being the side effect of so many things outside of the cable itself. You can test slow even if your cables are fast.

Kubernetes

You have a bunch of machines (bare metal servers, VMs) and you need a way to abstract them to form a cluster. You also need to deploy/upgrade/monitor your services and share hardware resources effectively. A container orchestrator that can do this is called Kubernetes (Κυβερνήτης in Greek - Helmsman/Pilot in English) [3].

Pods

They are computing slices of your cluster, and they will most probably be restricted by CPU and RAM at the container level. Kubernetes will do its best to fit as many pods as possible into your cluster. Keep in mind that your pods are not guaranteed to run as soon as you deploy them, since the system always has to work within specific constraints.

Headless browser pods

Running a browser reserves some network I/O, CPU and memory from the system. If you add recording capabilities you further increase CPU and memory consumption, and you also need (ephemeral) storage for those videos. Since your pod is stateless, you'll also need to copy the artifacts you created (logs, screenshots, video recordings) from your container's ephemeral storage to persistent storage.

These pods will be communicating with your web server pods.

You might decide to have an external service hitting your test web server (paid or self hosted). This uses the same principles with the main difference that the network adds to the communication delay since you are now probably communicating across different data centres / regions.

Web server pods

For the sake of simplicity let's assume that we have a pod that by definition includes all the stateful services needed for our web server to work. This pod will also reserve resources from our cluster in order to serve our web requests coming from the automated browser running our specs.

Flakiness

A unit test is so isolated that if it flakes, surely there's a problem in the code. It does not communicate with any external services, so when it fails the problem is always in the code itself. You may have inadvertently introduced a source of randomness in your code, or your business logic may behave non-deterministically because of a relative measure like 3.days.ago. Of course, you can fix those problems easily by removing the uncertainty and making the test deterministic.
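
As a minimal sketch of "removing the uncertainty", the snippet below injects the clock into a time-dependent rule instead of reading it implicitly. The `overdue?` rule, the `SECONDS_PER_DAY` constant and the three-day threshold are hypothetical and only stand in for logic built on something like 3.days.ago.

```ruby
SECONDS_PER_DAY = 24 * 60 * 60

# Hypothetical business rule: a record is overdue once it is older than 3 days.
# `now` is a parameter, so a test can pin the clock instead of depending on
# whatever Time.now happens to return while the suite is running.
def overdue?(created_at, now: Time.now)
  created_at < now - 3 * SECONDS_PER_DAY
end

# In a test we freeze "now" and the outcome no longer depends on the run time.
frozen_now = Time.utc(2024, 1, 10, 12, 0, 0)

puts overdue?(Time.utc(2024, 1, 6, 12, 0, 0), now: frozen_now) # older than 3 days
puts overdue?(Time.utc(2024, 1, 8, 12, 0, 0), now: frozen_now) # newer than 3 days
```

With the clock pinned, the first call reports an overdue record and the second does not, no matter when or where the spec runs.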

What if the spec is deterministic? Can it still fail?

Well, it depends on the spec level. The closer we are to a unit test, the harder it is for a spec to appear flaky and the easier it is to fix. The more subsystems interact within a spec, the more complex it becomes, and with that complexity come multiple new ways for it to fail.

An integration spec might hit the cache or the database. Even though we are not using the network layer for our requests, the controller-level objects will communicate with a lot of services that might be unavailable or slow at the moment of execution. You could use a dummy in-memory cache implementation to avoid network calls, and you can do the same for your database with a local file-based database, but this way you'll be limiting the "integration" part of the spec a bit.

When it comes to E2E specs though, the purpose of it all is to test the actual system in miniature size. You can even run E2E tests against your real production environment to make sure there are no differences from your staging or QA environment after you deploy your beautiful new feature. Now it's certain that we cannot avoid the uncertainty that comes with a massively complex system. How do you make a spec deterministic when the system is stochastic [8]?

At this stage I'll leave you with a large rope and a "nautical knot making" instruction manual...

Let's simply agree that we need to expect some kind of uncertainty overall, that's life. No need for drastic measures.

Counting our weapons

Retries

We could always retry a failing test. Seems naive enough and it could make a test suite complete its run eventually but at what cost?

Max retries

Retry up to x times. We'll need to inspect what's off if the spec fails multiple times consecutively, retrying forever would be wasteful.
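
A bounded retry can be sketched in a few lines of plain Ruby. The `with_retries` helper below is hypothetical (it is not an RSpec or CI feature), and the block that fails twice only simulates a flaky expectation.

```ruby
# Hypothetical helper: run the block up to max_attempts times and re-raise
# the last error once the budget is spent, so a real failure still surfaces.
def with_retries(max_attempts:)
  attempts = 0
  begin
    attempts += 1
    yield attempts
  rescue StandardError
    retry if attempts < max_attempts
    raise
  end
end

# Simulated flaky step: fails on the first two attempts, passes on the third.
result = with_retries(max_attempts: 3) do |attempt|
  raise "simulated expectation timeout" if attempt < 3

  "green after #{attempt} attempts"
end

puts result
```

Note that every extra attempt reruns the whole block, which is exactly the cost the question above is hinting at.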

Faster infrastructure

Throw money 💰 at the problem. My code is fast and furious both at app and spec level trust me, your machine is slow (yeah sure 😂).

What if we used all of the above and we still get some E2E specs failing?

I run the same E2E spec locally 1 trillion times and it's always green 🟢. I do the same in the CI and still cannot reproduce the error, even though it did fail on the same CI previously on numerous occasions, only to succeed after a retry. Am I crazy?

Coming back to our described system

Consider the Linux kernel process scheduler in its most primitive form: PIDs come in to take a small slice of compute time on the processor, in a circular queue. Even though all PIDs represent processes, not all PIDs are born equal. Some will be kings and some will be peasants; the former eat most of it while the latter can "eat brioche" [11].

Ok, ok we get the analogy, some pods/containers would be more privileged. But this hardly changes anything, my spec might take a year to finish but it would still be green. Right...?!?!

This might be the case for unit tests, but what if you end up in a situation where you have E2E "expectation timeouts"? You want to see the result of your action within the next "x" seconds, otherwise the expectation is considered a failure.
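
To make the idea of an expectation timeout concrete, here is a rough sketch of a polling wait with a deadline. The `wait_until` helper and the `ExpectationTimeout` error are hypothetical names; real E2E frameworks ship their own waiting mechanisms, and this is only meant to show why a slower system turns a correct spec red.

```ruby
class ExpectationTimeout < StandardError; end

# Hypothetical helper: poll the block until it returns a truthy value or
# until `timeout` seconds of wall time have passed.
def wait_until(timeout:, interval: 0.05)
  deadline = Process.clock_gettime(Process::CLOCK_MONOTONIC) + timeout
  loop do
    result = yield
    return result if result

    if Process.clock_gettime(Process::CLOCK_MONOTONIC) >= deadline
      raise ExpectationTimeout, "condition not met within #{timeout}s"
    end

    sleep interval
  end
end

# Simulated UI change that becomes visible 0.2 seconds after the action.
ready_at = Process.clock_gettime(Process::CLOCK_MONOTONIC) + 0.2
page_updated = -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) >= ready_at }

# A generous timeout passes even though the change is not instant.
puts wait_until(timeout: 1.0) { page_updated.call }

# A change that never shows up in time ends as a failed expectation.
begin
  wait_until(timeout: 0.1) { false }
rescue ExpectationTimeout => e
  puts "failed: #{e.message}"
end
```

The spec logic is identical in both calls. Only the relation between the system's response time and the timeout decides whether it is green or red.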

Great, my system is stochastic and all my E2E specs are doomed to fail 😭!

Yes and no. All systems have a steady state along with a measurable variance in their response to a stimulus [12]. A system has finite resources, and you can utilize all of them until there are none left. Everything from then on will be queued, waiting for its turn.
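
A toy model can illustrate the queueing effect. The sketch below assumes a single worker and evenly spaced jobs, which is far simpler than a real cluster; the `latencies` function and all the numbers are made up for illustration.

```ruby
# Toy model: one worker, jobs arrive every `arrival_interval` seconds and
# each needs `service_time` seconds of work. Returns, for every job, the
# time between its arrival and its completion (waiting in the queue included).
def latencies(job_count:, arrival_interval:, service_time:)
  worker_free_at = 0.0
  Array.new(job_count) do |i|
    arrival = i * arrival_interval
    start = [arrival, worker_free_at].max
    worker_free_at = start + service_time
    worker_free_at - arrival
  end
end

# Steady state: work arrives slower than it is served, latency stays flat.
steady = latencies(job_count: 5, arrival_interval: 2.0, service_time: 1.0)
puts steady.inspect

# Saturated: work arrives faster than it is served, every job waits longer.
saturated = latencies(job_count: 5, arrival_interval: 1.0, service_time: 2.0)
puts saturated.inspect

# With a hypothetical 4 second expectation timeout, the late jobs now fail.
puts saturated.count { |latency| latency > 4.0 }
```

In the saturated case the work per job never changed, yet the later jobs cross the timeout simply because they had to wait for their turn.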

Why do I see only some E2E specs failing and not others?

Well, you will have flaky specs that are just poorly written. E2E expectations are not as easy to write as simple spec expectations. Nevertheless, you can fix all of the problematic E2E specs so that they perform without using sleep() or some form of black magic while waiting for something to happen.

I did that, now I have only a bunch of specs that keep failing but I can't prove if they are flaky or it's just the CI being slow (even though I pay 1 trillion$ per month to vertically scale 🚀).

The first thing we need to do here is to prove that a spec is flaky and pinpoint the offending expectation. The only way to do this is by reproducing the error locally.

Enter cgroupsV2

Cgroups [1,7] were introduced a long time ago in the Linux kernel but went up in popularity when used at scale by containerization frameworks like Docker around 2013. It's been a long journey since then, with cgroupsV2 essentially replacing V1, mostly due to the hierarchical nature that was missing there. You are using cgroups every day without even knowing it, just check systemctl status.

It's worth reading the docs in man cgroups to get a sense of this amazing system. There's also a nice paper doing a "Jails vs Containers comparison" [9] if you are a FreeBSD fan.

We'll be using cgroupsV2 to simulate a ("near death") slow CI in our local environment. Even though the time consumed doing actual work inside the CPU will be the same, the "wall time" [13] will keep going. Not being able to serve a request or create a response in time is not measured in CPU cycles but in seconds passed on the client side.
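
The difference between CPU time and wall time can be observed from Ruby itself. In this sketch the hypothetical `measure` helper reads both clocks around a block, and `sleep` is only a stand-in (an assumption for illustration) for the periods in which a throttled process is not scheduled at all.

```ruby
# Hypothetical helper: measure a block with two clocks.
# CLOCK_MONOTONIC tracks elapsed (wall) time, CLOCK_PROCESS_CPUTIME_ID tracks
# only the time this process actually spent running on a CPU.
def measure
  wall_start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  cpu_start = Process.clock_gettime(Process::CLOCK_PROCESS_CPUTIME_ID)
  yield
  {
    wall: Process.clock_gettime(Process::CLOCK_MONOTONIC) - wall_start,
    cpu: Process.clock_gettime(Process::CLOCK_PROCESS_CPUTIME_ID) - cpu_start
  }
end

# While sleeping the process does no work, much like a throttled process
# waiting for its next slice: CPU time barely moves, wall time keeps going.
timing = measure { sleep 0.2 }

puts format("wall: %.3fs, cpu: %.3fs", timing[:wall], timing[:cpu])
```

An expectation timeout is compared against the wall clock, so it expires regardless of how little CPU time the spec was actually given.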

Under real CI conditions you'll get CPU spikes that will make part of your spec slower, but overall the slowest parts of your spec will be affected more resulting in timeout violations. If we could slow down the execution of the whole scenario we could reproduce the error in the slowest part of it.

```shell
# create a new cgroup to be used by our spec suite
sudo cgcreate -a "${USER}:${USER}" -t "${USER}:${USER}" -g "cpu:/user.slice/user-${UID}.slice/user@${UID}.service/app.slice/rspec_low_cpu"

# cpu.max="50000 100000" is 50% allocation of the available cpu slice, go as low as you need to reproduce the error
cgset -r cpu.max="50000 100000" "/user.slice/user-${UID}.slice/user@${UID}.service/app.slice/rspec_low_cpu"

# run the test in slower than usual cpu conditions
cgexec -g "cpu:/user.slice/user-${UID}.slice/user@${UID}.service/app.slice/rspec_low_cpu" rspec ./spec/system/flaky_spec.rb
```

Voila! It took a while to run but we are now happy to face a failing 🔴 test expectation.
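
For picking a cpu.max value, the arithmetic is simply quota divided by period, both in microseconds. The `cpu_fraction` helper below is a hypothetical convenience for reasoning about the setting, not part of any cgroup tooling; it also handles the special value max, which means no limit.

```ruby
# Hypothetical helper: turn a cpu.max value ("<quota> <period>", both in
# microseconds) into the share of CPU time the group may use per period.
def cpu_fraction(cpu_max)
  quota, period = cpu_max.split
  return Float::INFINITY if quota == "max" # "max" means no limit

  quota.to_f / period.to_f
end

puts cpu_fraction("50000 100000") # the value used in this article
puts cpu_fraction("25000 100000") # a harsher setting for stubborn specs
puts cpu_fraction("max 100000")   # unthrottled
```

Lowering the quota while keeping the period fixed is the knob to turn when the failure does not reproduce at 50%.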

What did we prove again?

We proved that a specific expectation is "CI load" dependent or "CPU bound", which is massive. We now have another problem to solve: it's not that our spec is non-deterministic, it's just that we are not taking our infrastructure's state into consideration when executing the spec.

Options

You can't always optimize the code being called through an E2E spec because it might involve a large percentage of your application, and you also don't want to omit testing this specific action due to its importance. E2E specs are not made to be fast, they are made to cover our applications from top to bottom as a system.

What options do we have then?

- Measure the "time to expect": how much time does this expectation usually take in your CI? Check the outliers (variance), as those will most probably be the flaky occurrences. When you have the answers, adjust your expectation timeout accordingly. Prefer a multiple of your global E2E expectation timeout over a fixed value at the spec level. This way, when your CI performance changes you only have to adjust the global value instead of going through all the tests and figuring out a new static one.

- Check if you can make the action executed right before the expectation faster. Let's say you upload and parse one audio file and do some transformations on it: is it possible to achieve the same spec goals with a smaller file?

- Don't feel shame when you automatically retry a failing E2E spec, sometimes it's just OK. Remember though that retrying a spec as a whole will most probably take longer than extending a single expectation's timeout. Also, waiting does not consume more resources from the system, while retrying does.

- Make sure your CI is not doing more than it can do. Are you recording your E2E test runs in case of failure? Using ffmpeg comes at a cost, if screenshots on spec failures are enough maybe disable recordings to save some CPU for actual spec related work.

- Container image creation is also something that should be highly optimized. All physical resources in your cluster are shared resources, creating new images can be the "noisy neighbor" even if you are not sharing your cloud infrastructure with other companies. Remember: all test pipelines are running in your cluster simultaneously in different pods but still on the same cluster's hardware.

- Shared cloud infrastructure is cheaper but comes with a cost. Everything will become a roller coaster in terms of performance and you should have that in mind when using it.

- How clogged is your CI pipeline? Running your test suite on every commit is sweet but you'll soon realize that having more engineers will result in overly aggressive usage of the CI resources. Try to kill running test pipelines as soon as a new commit arrives in the feature branch. If your infrastructure is still under stress consider running the test pipeline when a PR has reached a specific state, for example ready-to-review.

- Is it possible to distribute your physical CI resources better? There's always the case of under provisioning, if you have already optimized everything then you might need more workers for the amount of work needed.

- Set an (SRE) error budget on your CI test pipeline run time [2]. This way at least you know where you are and you can search for solutions when the budget is exhausted. Remember the CI pipeline consists of several steps with the test suite being just one of them.

- Measure everything. Hook your pipeline and test suite with monitoring software and have a clear view of what slows you down. It might be 1 spec that takes 99 minutes to finish, it might be that your pipeline caches are always invalidating due to a configuration error and keep regenerating on every run.
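
The first option in the list, measuring the "time to expect" and expressing a spec's timeout as a multiple of the global one, could look roughly like this. Everything here is an assumption for illustration: the 5 second global timeout, the sample durations, the nearest-rank percentile and the helper names.

```ruby
GLOBAL_EXPECTATION_TIMEOUT = 5.0 # seconds, hypothetical suite-wide default

# A spec-level timeout expressed as a multiple of the global value, so that
# tuning the global value retunes every spec that depends on it.
def scaled_timeout(multiplier, global: GLOBAL_EXPECTATION_TIMEOUT)
  global * multiplier
end

# Nearest-rank percentile over the measured durations.
def percentile(samples, pct)
  sorted = samples.sort
  rank = (pct / 100.0 * sorted.length).ceil
  sorted[[rank, 1].max - 1]
end

# Smallest whole multiple of the global timeout that covers the percentile.
def suggested_multiplier(samples, pct: 99, global: GLOBAL_EXPECTATION_TIMEOUT)
  [(percentile(samples, pct) / global).ceil, 1].max
end

# Made-up "time to expect" measurements (seconds) for one expectation in CI.
durations = [1.2, 1.4, 1.3, 1.5, 1.1, 1.6, 1.3, 9.8, 1.4, 1.2]

puts percentile(durations, 50)          # typical run
puts percentile(durations, 99)          # the outlier that shows up as "flaky"
multiplier = suggested_multiplier(durations)
puts multiplier
puts scaled_timeout(multiplier)
```

In this made-up data set a single slow run sits far from the median, and doubling the global timeout for that one expectation would absorb it without touching the rest of the suite.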

Resources

1. https://man7.org/linux/man-pages/man7/cgroups.7.html

2. https://sre.google/workbook/error-budget-policy/

3. https://kubernetes.io/docs/home/

4. https://cucumber.io/docs/bdd/

5. https://www.cypress.io/

6. https://www.redhat.com/en/topics/cloud-native-apps/stateful-vs-stateless

7. https://facebookmicrosites.github.io/cgroup2/docs/overview

8. https://en.wikipedia.org/wiki/Stochastic_process

9. [Jails vs Containers] https://www.diva-portal.org/smash/get/diva2:1453017/FULLTEXT01.pdf

10. [C. Robert Silverfin] our fictional "famous quote guy"

11. https://www.britannica.com/story/did-marie-antoinette-really-say-let-them-eat-cake

12. https://en.wikipedia.org/wiki/Transfer_function

13. https://en.wikipedia.org/wiki/Elapsed_real_time